Deep Learning Based Frameworks for the Detection and Classification of Soniferous Fish

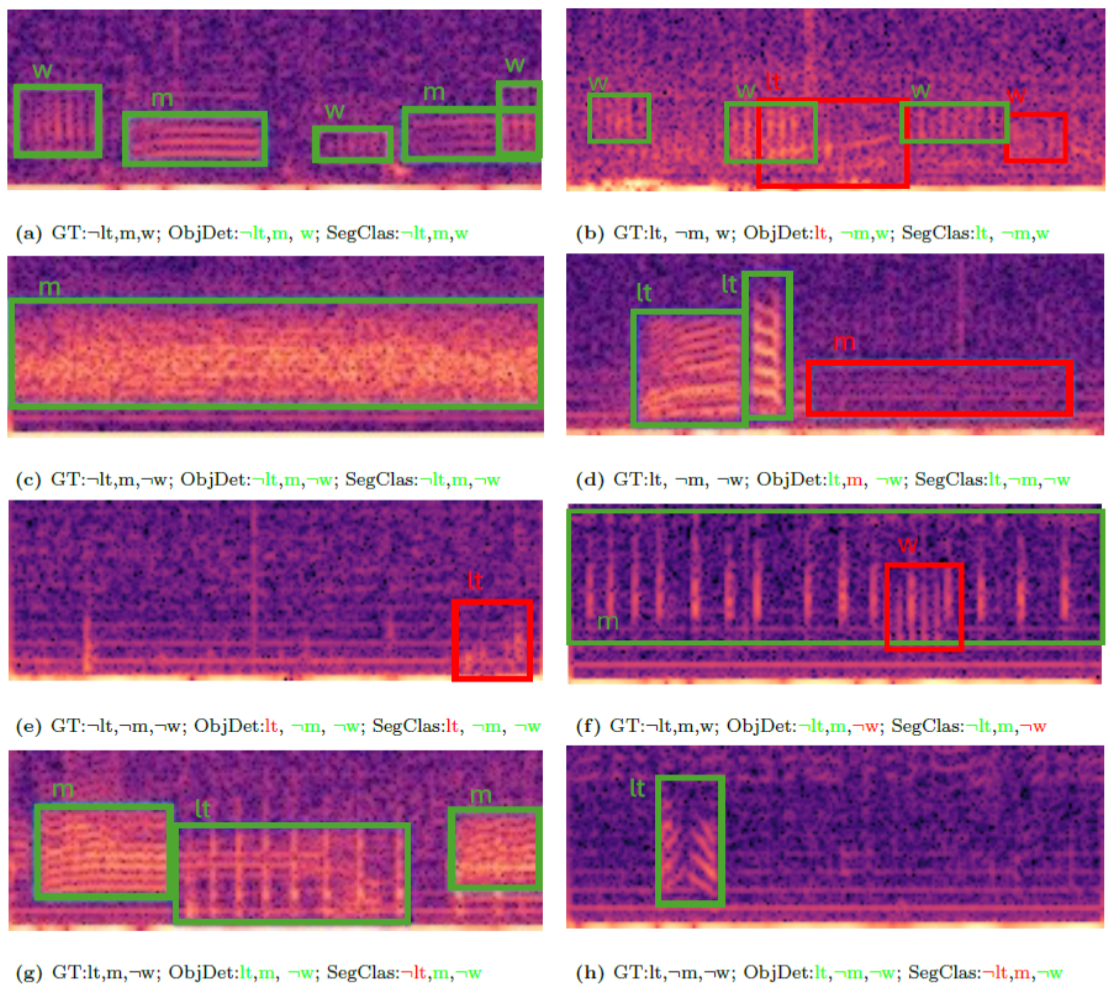

Passive Acoustic Monitoring (PAM) is emerging as a valuable tool for assessing fish populations in natural habitats. This study compares two deep learning-based frameworks: (1) a multi-label classification system (SegClas) combining Convolutional Neural Networks (CNNs) and Long Short-Term Memory (LSTM) networks, and (2) an object detection approach (ObjDet) using a YOLO-based model. Both are used to detect, classify, and count sounds produced by soniferous fish in the Tagus estuary, Portugal. The target species, Lusitanian toadfish (Halobatrachus didactylus), meagre (Argyrosomus regius), and weakfish (Cynoscion regalis), exhibit overlapping vocalization patterns, which makes classification challenging. Results show that both methods achieve high accuracy (over 96%) and F1 scores above 87% for species-level sound identification, demonstrating their effectiveness under varied noise conditions. ObjDet generally offers slightly higher classification performance (F1 up to 92%) and can annotate each vocalization individually for more precise counting. However, it requires bounding-box annotations and incurs a higher computational cost (inference time of ca. 1.95 seconds per hour of recording). In contrast, SegClas relies on segment-level labels and provides faster inference (ca. 1.46 seconds per hour of recording). This study also compares the two counting strategies, each of which offers distinct advantages for different ecological and operational needs. Our results highlight the potential of deep learning-based PAM for fish population assessment.
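To put the reported inference times in operational terms, the following minimal sketch converts them into a real-time factor and a total processing time for a long recording. Only the two per-hour figures (ca. 1.46 s and ca. 1.95 s) come from the text above; the function names and the 30-day deployment length are hypothetical, chosen for illustration, and the result assumes hardware comparable to that used in the study.

```python
SECONDS_PER_HOUR = 3600.0

# Approximate inference times reported above, in seconds per hour of recording.
INFERENCE_S_PER_HOUR = {"SegClas": 1.46, "ObjDet": 1.95}


def real_time_factor(inference_s_per_hour: float) -> float:
    """Return how many seconds of audio are processed per second of compute."""
    return SECONDS_PER_HOUR / inference_s_per_hour


def processing_time_s(hours_of_audio: float, inference_s_per_hour: float) -> float:
    """Return the total inference time in seconds for a recording of the given length."""
    return hours_of_audio * inference_s_per_hour


if __name__ == "__main__":
    # Hypothetical deployment: 30 days of continuous recording.
    hours = 24 * 30
    for name, cost in INFERENCE_S_PER_HOUR.items():
        rtf = real_time_factor(cost)
        total_min = processing_time_s(hours, cost) / 60.0
        print(f"{name}: ~{rtf:.0f}x real time, ~{total_min:.1f} min for {hours} h of audio")
```

Under these figures, ObjDet takes roughly 1.3 times as long as SegClas per hour of audio, yet both run far faster than real time, so the choice between them is more likely to hinge on annotation effort and counting granularity than on compute.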